Sipu Ruan 1, Weixiao Liu 2, Xiaoli Wang 1, Xin Meng 1, and Gregory Chirikjian 1,3

1 Department of Mechanical Engineering, National University of Singapore, Singapore

2 Department of Mechanical Engineering, Johns Hopkins University, Baltimore, MD, USA

3 Department of Mechanical Engineering, University of Delaware, Newark, DE, USA

Published in IEEE Transactions on Robotics (T-RO)

Introduction

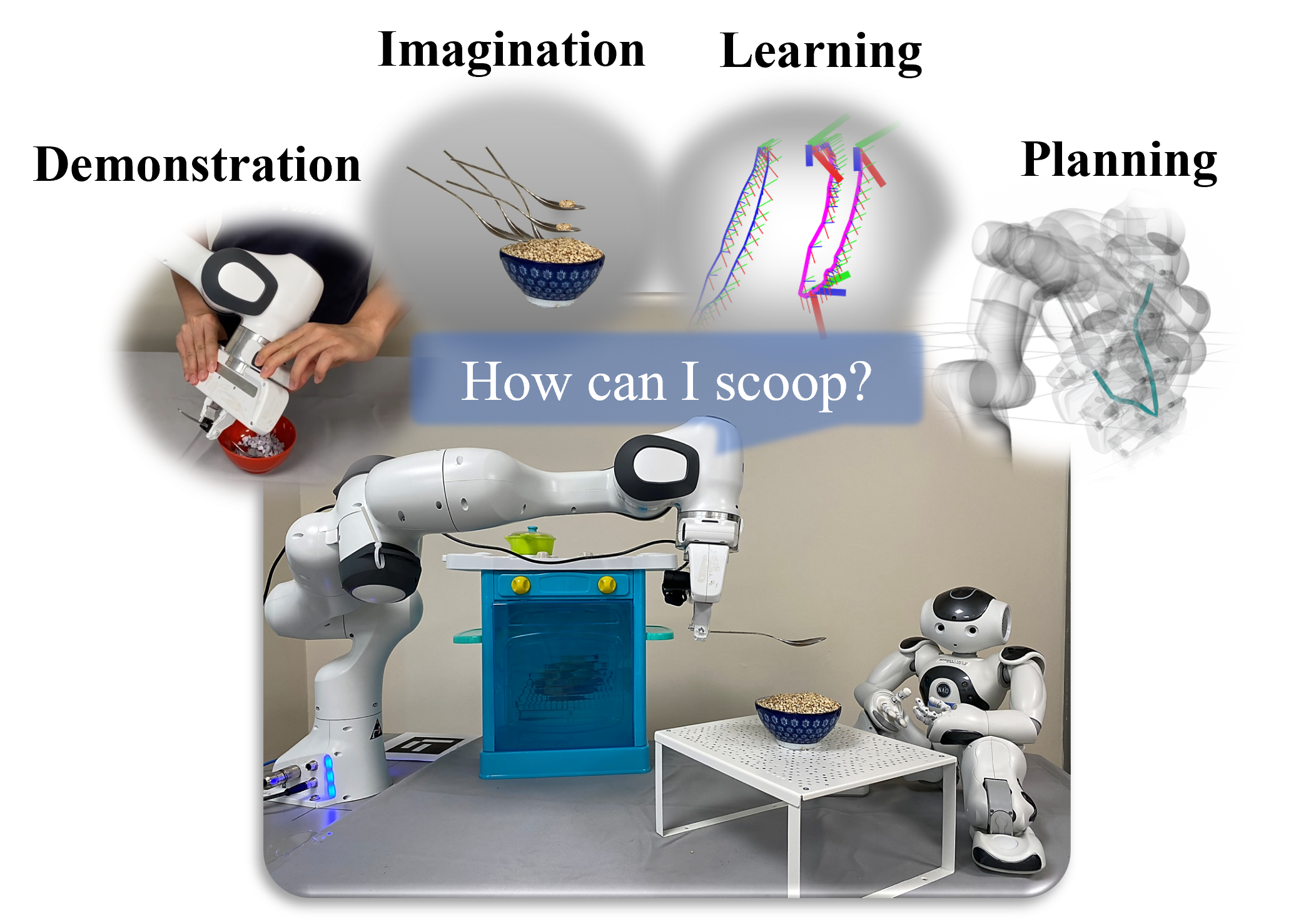

This paper proposes a learning-from-demonstration (LfD) method using probability densities on the workspaces of robot manipulators. The method, named PRobabilistically-Informed Motion Primitives (PRIMP), learns the probability distribution of the end-effector trajectories in the 6D workspace, which includes both positions and orientations. It can adapt to new situations such as novel via points with uncertainty and a change of viewing frame. The method is robot-agnostic, in that the learned distribution can be transferred to another robot by adapting it to that robot's workspace density. Workspace-STOMP, a new version of the existing STOMP motion planner, is also introduced; it can be used as a post-process to improve the performance of PRIMP and any other reachability-based LfD method. The combination of PRIMP and Workspace-STOMP can further help the robot avoid novel obstacles that were not present during the demonstration process. The proposed methods are evaluated with several sets of benchmark experiments. PRIMP runs more than 5 times faster than existing state-of-the-art methods while generalizing trajectories more than twice as close to both the demonstrations and novel desired poses. The methods are then combined with our lab’s robot imagination method, which learns object affordances, to illustrate their applicability to learning tool use through physical experiments.

Links

- Paper

- Poster

- Code

  - Source code: MATLAB, Python

  - Documentation: Python API reference

- Presentations

  - Presented at the RSS 2023 workshop on Learning for Task and Motion Planning

Supplementary Video

If the video cannot play, please visit Media.

Features of PRIMP:

1) Adaptation to new situations

- Novel via points with uncertainty

- Equivariance under a change of viewing frame

- Avoidance of previously unseen obstacles while keeping the key features of the demonstrations (using the proposed Workspace-STOMP)

2) Robot-agnostic

- Skills can be easily transferred to another robot by adapting to its workspace density

3) Combines with affordance learning

- Object affordances are learned through physical interaction, so that a skill can be applied to novel objects with the same affordance
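To give a rough intuition for the "novel via points with uncertainty" feature, the sketch below fuses a learned Gaussian over a single trajectory step with a noisy via point. This is not the PRIMP implementation: PRIMP works with full 6D poses (positions and orientations), whereas this sketch assumes a position-only state with independent axes (diagonal covariance). The function name `fuse_via_point` and all numbers are hypothetical and chosen only for illustration.

```python
def fuse_via_point(mean, var, via, via_var):
    """Condition a per-axis Gaussian (mean, var) on a noisy via point.

    Assumption: axes are independent, so each axis is a scalar Gaussian
    update. A small via_var pulls the result onto the via point, while a
    large via_var leaves the learned distribution almost unchanged.
    """
    new_mean, new_var = [], []
    for m, v, y, r in zip(mean, var, via, via_var):
        gain = v / (v + r)                  # weight given to the via point
        new_mean.append(m + gain * (y - m)) # shift the mean toward the via point
        new_var.append((1.0 - gain) * v)    # uncertainty shrinks after fusion
    return new_mean, new_var


# Hypothetical learned distribution at one time step (x, y, z in metres).
prior_mean = [0.0, 0.0, 0.0]
prior_var = [0.04, 0.04, 0.04]

# Hypothetical via point, once observed confidently and once with noise.
via = [0.10, -0.20, 0.30]
confident = fuse_via_point(prior_mean, prior_var, via, [1e-6] * 3)
uncertain = fuse_via_point(prior_mean, prior_var, via, [0.04] * 3)

print("confident via point:", confident)
print("uncertain via point:", uncertain)
```

With a confident via point the adapted mean lands almost exactly on the via point. With a via-point variance equal to the learned variance, the adapted mean sits halfway between the learned mean and the via point, so the via-point uncertainty directly controls how far the adapted trajectory departs from the demonstrations.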

Citation

S. Ruan, W. Liu, X. Wang, X. Meng and G. S. Chirikjian, "PRIMP: PRobabilistically-Informed Motion Primitives for Efficient Affordance Learning from Demonstration," in IEEE Transactions on Robotics, doi: 10.1109/TRO.2024.3390052.

BibTeX

@ARTICLE{10502164,

author={Ruan, Sipu and Liu, Weixiao and Wang, Xiaoli and Meng, Xin and Chirikjian, Gregory S.},

journal={IEEE Transactions on Robotics},

title={PRIMP: PRobabilistically-Informed Motion Primitives for Efficient Affordance Learning from Demonstration},

year={2024},

volume={},

number={},

pages={1-20},

keywords={Trajectory;Robots;Probabilistic logic;Planning;Affordances;Task analysis;Manifolds;Learning from Demonstration;Probability and Statistical Methods;Motion and Path Planning;Service Robots},

doi={10.1109/TRO.2024.3390052}}